While I’ve procrastinated on this for some time, I finally got around to implementing a game feature this month. A big part of my game “True King” will be a turn-based strategy mode, held on a map grid between two armies, like “Fire Emblem” or “Final Fantasy – Tactics.” Or, more generally, like Chess or Checkers. I had already made a prototype where the enemy character AI moved randomly (see that article here from… 18 months ago?!?). Now, the enemy AI knows where your players are, and knows to move towards you, to create the illusion of a somewhat tactical army.

How does one create artificial intelligence for this type of thing? It’s a basic concept, and I’ll talk about how I did it.

First, boy it is not easy going back to code I’ve written over a year ago. It was ugly code to begin with, and I’ve gone out of my way to not rewrite it when changing how my AI behaves. There may be limitations I have to deal with later… and taking a few hours to understand how I implemented the old system was not a good use of time. Documentation is important, folks!

Anyway, one of the first big questions in AI 101 is typically this: how can I make a computer play chess? There’s a long history on the problem, with research as early as 1950, and decades later, programs smart enough to beat even the best human opponents. Today, machine learning AI can devise strategies by playing against itself millions of times for almost any strategy-based board game.

But that’s a bit too complex. Artificial intelligence, much to my disappointment, is not a magic trick. Professionals would label even a simple “Hello World” program as an AI, since a human might be convinced, even if for only a moment, that a computer that can say “hello” to a human must be smarter than simply a metal machine. Similarly, playing chess can be programmed by the following: the programmer defines rules for each piece (a pawn can move forward one step, a rook can move horizontally, etc.), and the AI would randomly pick one of its pieces at each turn and move them to a random location that qualifies as a legal move. If a selected piece cannot move, randomly choose a different piece that turn until you can. Et voilà. Your computer can play chess. Much worse than a human can, but technically valid.

Any subsequent tricks are to make your AI smarter, step by step. For example, if a chess piece can take out the other player’s piece in a given turn, then do so. This can be done with “if” statements to check if any valid moves for a piece would land on top of a piece of the opponent. Technically, my AI was already capable of this.

For chess, the next steps would be to “predict” the best current move, in order to make the opponent’s pieces more vulnerable for the next turn. This is a bit more tricky… generally, it can involve calculating every possible turn the computer can make, versus every turn the opponent can make in the next move, and determining what current computer move would statistically lead to the best chance of taking an opponent’s piece in the following move. For example, moving a rook two steps forward might lead to a 0.4% chance of taking an opponent’s piece the next turn, while moving a bishop 3 steps back might lead to a 0.26% chance. If a move could trap one of the opponent’s pieces, it should return a 100% chance. This could be refined to give higher weight to certain pieces (queen or king, for example). Strong AI wouldn’t look just one step ahead, but several. In terms of computation, this can create a tree of countless branches, a lot of processing power to calculate and store in memory. In reality, looking 3 or 4 turns ahead is already likely to beat most human opponents, and is likely as simple as a chess AI on your phone would be.

But what about programming the AI to “Fire Emblem?” In that game, players might have a restriction on how many grid cells away they can move, but there aren’t any complex patterns like in Chess. And a player tries to move near an opponent to attack it, requiring to attack over multiple turns to defeat an enemy unit. In some ways, this simplifies things: each AI unit can be treated independently, rather than having to calculate the best unit to move in a given turn. If attack patterns are fairly consistent (for me, likely always “can attack if one cell away” or “can attack if two cells away”), we can choose to move to a cell that number of cells away from an opponent.

But how do we know how to pick a cell that’s a certain number of cells away from an opponent? How can we even know how to move an AI unit towards an opponent AI? We use “pathfinding!”

Be it turn-based or real-time, pathfinding is one of the most common examples of AI in video games. It involves representing a traversable world into a connected graph of nodes. Even a Call of Duty map could be represented as one: you might have nodes at doorways, at corners or centers of rooms, or sprawled outside on stairways and ground terrain around buildings and cover. If their connections are defined, then you have defined paths that an AI could take to get from one given location to another (ignoring collision with other players or dynamic elements along a path). For a grid-based system, the idea of a graph is practically automatic.

But how do we calculate how to move from one location to another, preferably in the shortest distance possible? This relates to implementing a chess AI, even if moving a piece towards an enemy piece isn’t necessary for that AI to calculate the best move. Without delving too much into completeness and graph theory, there are a variety of existing algorithms to calculate this. The algorithm I used is the famous “Dijkstra’s Algorithm.”

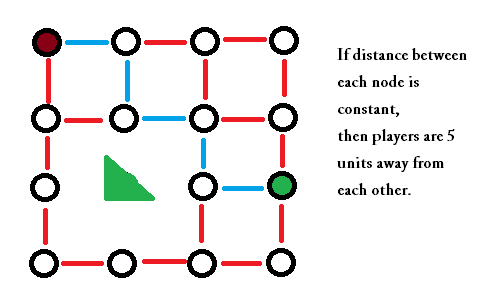

It’s difficult to explain in text how the algorithm works (Wikipedia has nice articles on pathfinding, including an animated gif to show Dijkstra in action). To start, assume we have a starting node, and want to find the shortest path to a target node. First, check closest neighbor nodes: on a chess board, if starting from a corner, there would be two neighbors. Add those two neighbors to a list, and record that the starting node has been visited (with a distance of 0) and so has the two neighbors (with a distance of 1). Now, repeat this for the nodes in the maintained list: check the neighbors of the first original neighbor. This includes the starting node, but since it was already visited (with a distance less than x + 1), we ignore it. Add brand new neighbors to the list and remove the node we were looking at from the list. If we repeat this, we should have a number for each node on the map, representing the shortest distance to get there from the starting node.

If you have a target node, you can stop when the target is reached, rather than searching the entire map. But how do you actually traverse the map to find how to move an AI along a path? Work backwards. Suppose the target node is found to be 8 units away from the start. Then check the neighbors of the target for a unit that is 7 units away. Then check for 6. Then 5. Soon, you’ll have a valid path that represents the shortest way to get there.

Perhaps you’ve heard of the “A* Algorithm?” It operates similarly, but expects an additional heuristic to help calculate most likely nodes along the final path rather than searching the entire map one node at a time. A common heuristic is literal distance. In Unity3D, you can use “Vector3.Distance” to get the distance between two objects. In addition to just using a list of nodes in order of how far they are from the starting node, A* can choose to give higher priority to calculate nodes based on their physical distance from the target. If there is a path that represents a straight line with no obstacles, A* can be much faster than Dijkstra’s to calculate.

So why don’t I use A*? Because I’m not calculating distance to a specific target: at each turn, I use Dijkstra’s to calculate the distance from a starting node (the AI’s position) to ALL cells on the map. After this, I can instantly determine the closest enemy, and a path to move towards it. If I have a grid of size n x n, with number of AI units being x, and number of opponent units being y, then this process takes a complexity of approximately O(((n^2) + y) * x). If instead using Dijkstra’s multiple times to calculate the shortest path to a target at the start, this would be a worst-case scenario of approximately (O(((n^2)*y)*x)). A* could be better in most cases, but still has the same worst-case complexity.

… wait. If I was just looking for the closest cell with an enemy on it, I could run Dijkstra’s without searching and saving the entire map, just searching until an enemy is found, which mathematically would be the closest one. But perhaps I don’t simply want to find the closest (I suggest why at the end of this post)… therefore this implementation is still a good choice.

I was hopeful that I could calculate the distance between cells at startup of the game, or even cache it into a file before compiling the game for a static map. Alas, I can’t, because moving players can block paths on more complex maps. So complete distance maps for each AI player must be calculated at each turn (just for the cells where each AI is located; not calculating the distance from every cell to every other cell on the map).

Does this implementation take a long time to compute? Surprisingly, it’s almost instant. Even pre-calculating for every cell at startup (for my case, a 12×10 grid) is really quick, only taking extra seconds to load and initialize the 3D Cel Animation character textures. It’s sobering to see how quickly it loads versus the main adventure map, where the entire forest and mountain-scape is loaded in memory as a whole…

Very good then. I have a rudimentary AI that can at least require a player to think a little when moving its army. It wouldn’t be hard to update the heuristic as to what player unit should be targeted: right now, an enemy AI just looks for the closest player. But what about the closest player that would require the least amount of attacks to kill? What about the a player closest on average to the opponents, to allow “ganging up” on a unit? What about a player that is closest to the enemy AI, but also farthest from the rest of its own units, to choose a most-likely target that won’t allow the player to gang-up back at it? There are a variety of possible strategies, which I plan to test to help create different difficulty levels, each without creating branching logic to predict future moves. By not creating a branching tree, the AI will likely never be so smart to give expert players a challenge, but I’ve never been enough of a geek to cater to that type of audience anyway. The amount of moves possible in any given turn will seem freeing enough to make such subtleties less of an issue.

The pieces are in place. A lot of smaller details need to be implemented, but I’m close to a genuine playable prototype of “True King” ‘s first 10 minutes of gameplay.