Ever since its reveal last month (August 2018), and likely for months after its release later in September 2018, the tech industry has been abuzz about Nvidia’s newest line of high-end graphics cards. Branded “RTX” instead of “GTX,” the new line is capable of better “ray tracing” performance than even specialized industry-level cards from only a year ago. But what is “ray tracing?” And, more importantly, to answer the question everyone’s been talking about: how does the RTX line affect the viability of “3D Cel Animation?”

No one is asking about that? Oh… well, I’ll talk about what I understand ray tracing to be, anyway. Complete with hand-made diagrams of irregular quality.

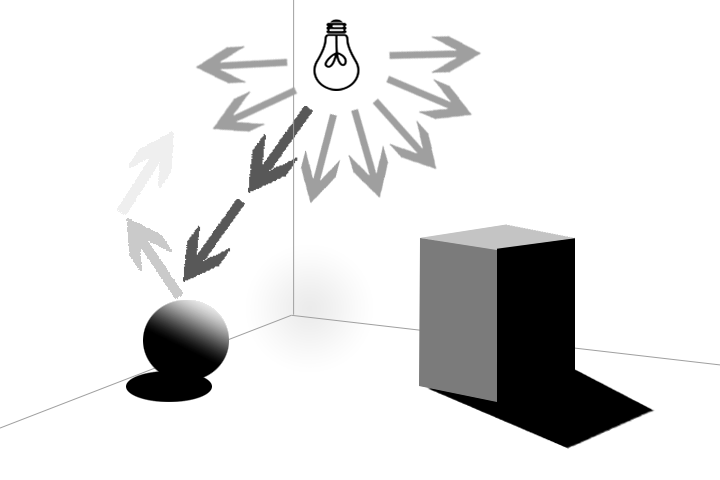

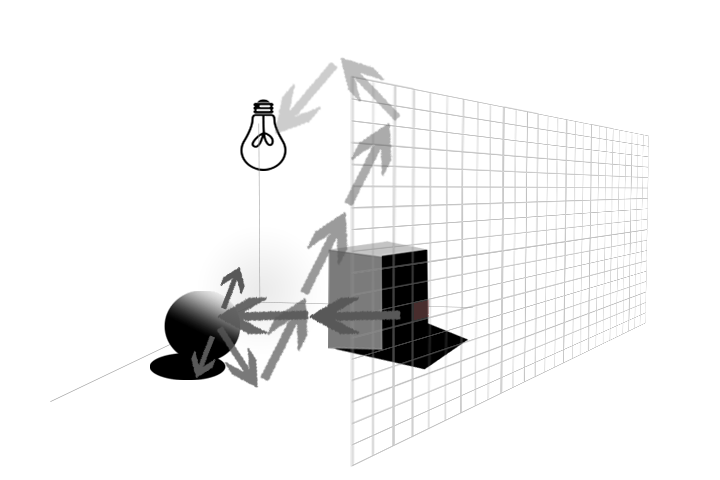

Firstly, ray tracing is a new buzzword for a graphics field that’s been around for decades. It generally refers to simulating lighting in 3D graphics. If you were to place a light source in a 3D scene, you might expect it to behave in a similar way to real-world light: light would extend as rays from the source and bounce off all the surfaces around it; if light hits a surface directly, that surface receives the most light, but the farther the light has to travel, the less of it arrives. Light can bounce off most surfaces to varying degrees, providing indirect lighting to other objects. See the images above.
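To make the distance falloff concrete, here is a minimal Python sketch of the direct light a surface point receives from a single point light. It assumes the standard inverse-square law (light spreads over an area that grows with the square of the distance) and Lambert’s cosine law (surfaces angled away from the light receive less). The function name `direct_light` and its parameters are hypothetical, chosen for illustration.

```python
import math

def direct_light(intensity, light_pos, point, normal):
    """Direct light reaching `point` (with unit surface `normal`)
    from a hypothetical point light at `light_pos`."""
    # Vector from the surface point toward the light
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist_sq = sum(c * c for c in to_light)
    dist = math.sqrt(dist_sq)
    direction = [c / dist for c in to_light]
    # Lambert's cosine law: a surface facing the light head-on gets the most;
    # a surface facing away gets none
    cos_theta = max(0.0, sum(d * n for d, n in zip(direction, normal)))
    # Inverse-square law: doubling the distance quarters the light
    return intensity * cos_theta / dist_sq

print(direct_light(100.0, (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
print(direct_light(100.0, (0.0, 0.0, 2.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```

Moving the light from 1 unit to 2 units away drops the result from 100 to 25, which is the “far distance reduces the light” behavior described above.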

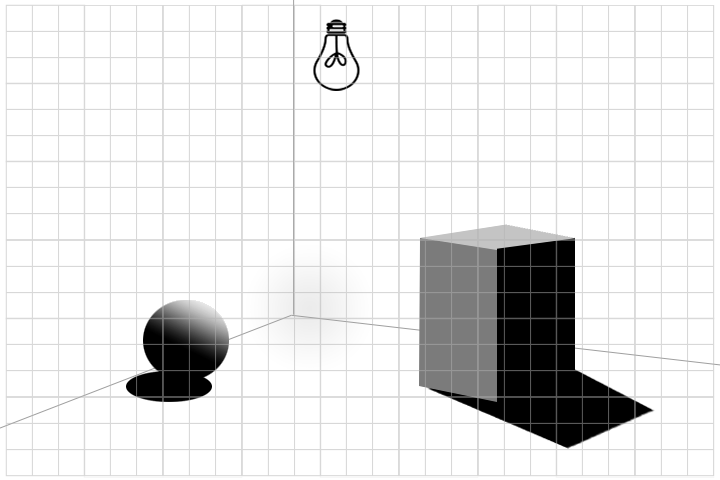

But one could draw an infinite number of rays from a light source, making it impossible to calculate. It is much more practical to do the calculation in reverse: we know we are going to output the image to a screen for the viewer to see. Today, that would commonly be a 1920×1080 (1080p) screen, which contains 2,073,600 pixels. So we calculate lighting by shooting a ray out from each pixel and bouncing it off the first surface it hits to work out the lighting and shadow to render. If there were no lighting to compute, we could stop at simply reading the color/material data at the point where the ray hit the surface, but recursive ray casting gives us a better idea of what the lighting on that color should be.

Casting a ray from a pixel to a surface. Notice that shadow and basic lighting can be determined immediately at the first hit.
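Shooting one ray per pixel can be sketched in a few lines of Python. This is a minimal toy, assuming a pinhole camera at the origin looking down -z and a scene containing a single sphere; `primary_ray`, `hit_sphere`, and the 60° field of view are hypothetical choices, not taken from any particular renderer. It only finds the first hit (no lighting yet), printing `#` where a pixel’s ray hits the sphere and `.` where it misses.

```python
import math

def primary_ray(px, py, width, height, fov_deg=60.0):
    """Unit direction of the ray through the center of pixel (px, py),
    for a pinhole camera at the origin looking down -z."""
    aspect = width / height
    half_height = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel center into camera space (y flipped so +y is up)
    x = (2 * (px + 0.5) / width - 1) * aspect * half_height
    y = (1 - 2 * (py + 0.5) / height) * half_height
    length = math.sqrt(x * x + y * y + 1)
    return (x / length, y / length, -1 / length)

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to its first hit on the sphere, or None."""
    oc = [o - k for o, k in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t > 0:
            return t
    return None  # the sphere is behind the camera

WIDTH, HEIGHT = 16, 8  # a 1920x1080 screen would need 2,073,600 of these rays
for py in range(HEIGHT):
    row = ""
    for px in range(WIDTH):
        ray = primary_ray(px, py, WIDTH, HEIGHT)
        hit = hit_sphere((0.0, 0.0, 0.0), ray, (0.0, 0.0, -5.0), 1.5)
        row += "#" if hit is not None else "."
    print(row)
```

The work scales directly with the pixel count: every extra pixel is one more ray to cast and test against the scene.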

A ray can bounce indefinitely for indirect light, but parameters can be given to limit how many times it bounces while trying to reach a light source. Data at each surface can determine how much (if any) light would bounce back to the original surface. Notice that after the first hit, a test can be done to determine whether there is a direct, unblocked path from the light to the surface. This gives a quick estimate of lighting and shadows. If there are multiple light sources, then that shadow test has to check all of them, or at least choose the “closest” one and use that. This is part of why having multiple light sources in a scene is more expensive than having just one, and why making those lights and objects “static” (not moving) can reduce that expense, as determining the closest light can be done just once at launch. It’s also why games generally run faster at a lower resolution: fewer pixels means fewer rays (or, more generally, fewer per-pixel calculations).
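The first hit, the shadow test, and the bounce limit described above can be combined into a small recursive tracer. This is a toy under loudly stated assumptions, not how any production renderer or RTX hardware works: the scene contains only spheres, brightness is a single grayscale number, every bounce is a perfect mirror reflection, this version checks every light rather than only the closest one, and `MAX_BOUNCES`, `EPS`, and the dictionary scene format are hypothetical choices for illustration.

```python
import math

MAX_BOUNCES = 2  # hypothetical cap on how many times a ray may bounce
EPS = 1e-6       # ignore hits this close to a ray's start (avoids self-hits)

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(a): return scale(a, 1 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the sphere, or None if it misses."""
    oc = sub(origin, center)
    b = dot(direction, oc)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t > EPS:
            return t
    return None

def nearest_hit(origin, direction, spheres):
    """Closest sphere hit by the ray as (t, sphere), or None."""
    best = None
    for s in spheres:
        t = hit_sphere(origin, direction, s["center"], s["radius"])
        if t is not None and (best is None or t < best[0]):
            best = (t, s)
    return best

def trace(origin, direction, spheres, lights, depth=0):
    """Grayscale brightness seen along one ray."""
    hit = nearest_hit(origin, direction, spheres)
    if hit is None:
        return 0.0  # the ray escaped the scene: black background
    t, s = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, s["center"]))
    brightness = 0.0
    # One shadow ray per light: more lights means more rays per pixel
    for light_pos, intensity in lights:
        to_light = sub(light_pos, point)
        dist = math.sqrt(dot(to_light, to_light))
        l_dir = scale(to_light, 1 / dist)
        blocker = nearest_hit(point, l_dir, spheres)
        if blocker is None or blocker[0] > dist:  # unblocked path to the light
            brightness += intensity * max(0.0, dot(normal, l_dir)) / dist ** 2
    # Bounce a mirror-reflected ray, but only up to MAX_BOUNCES times
    if depth < MAX_BOUNCES and s["reflectivity"] > 0:
        r = sub(direction, scale(normal, 2 * dot(direction, normal)))
        brightness += s["reflectivity"] * trace(point, r, spheres, lights, depth + 1)
    return brightness

target = {"center": (0.0, 0.0, -5.0), "radius": 1.0, "reflectivity": 0.0}
blocker = {"center": (0.0, 1.5, -2.0), "radius": 0.5, "reflectivity": 0.0}
light = ((0.0, 3.0, 0.0), 25.0)
print(trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [target], [light]))
print(trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [target, blocker], [light]))
```

The second call places a small sphere between the lit point and the light, so the shadow ray is blocked and that point goes dark. With two mirrors facing each other, `MAX_BOUNCES` is the only thing that stops a ray from bouncing forever; raising it adds reflection detail at the cost of more rays.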

This is what ray tracing is. Even though it is more efficient than simulating every ray a light source gives off, ray tracing can still be really slow. Most 3D CGI movies use a form of ray tracing in Autodesk Maya (or other software) to render images with realistic lighting. Even today (in 2018), companies like Pixar and Disney report render times of over 24 hours for a single frame of a 24fps movie, using massive server farms to finish rendering over 100,000 frames in total.
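To see why server farms are needed, here is the rough arithmetic, assuming a hypothetical 90-minute film and treating the 24-hours-per-frame figure as time on a single machine (a simplification):

$$
\begin{aligned}
90\ \text{min} \times 60\ \tfrac{\text{s}}{\text{min}} \times 24\ \tfrac{\text{frames}}{\text{s}} &= 129{,}600\ \text{frames} \\
129{,}600\ \text{frames} \times 24\ \tfrac{\text{h}}{\text{frame}} &= 3{,}110{,}400\ \text{h} \approx 355\ \text{years on one machine}
\end{aligned}
$$

Split across a farm of, say, 1,000 machines (a made-up number), that is still about 3,110 hours, or roughly 130 days of rendering.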

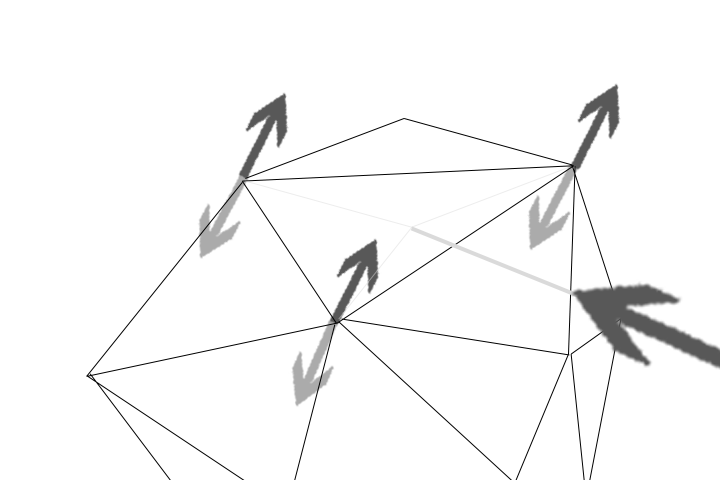

Nvidia mentioned in its reveal of the new RTX graphics cards (the 2070, 2080 and 2080 Ti, as announced at the time of this writing) that “rasterization” is commonly used today for real-time lighting in games. It’s hard to find much documentation on what this means exactly when it comes to lighting; generally, the term means converting shapes into a finite grid of pixels. A JPEG picture is a raster image: a grid of pixels that could represent what was originally a 3D scene or a 2D vector image. From what I gather, “rasterization” for real-time lighting can use a variety of techniques, the most common being to draw one object at a time and calculate its lighting as it is drawn, perhaps first doing this from the light’s point of view to calculate shadows, and then again to render. Basically, it means doing what the above image shows, but without bouncing the ray repeatedly until it reaches the light source. To improve efficiency, objects store usable data at their vertices that can describe color, texture, and potentially lighting: if a large square surface with 4 vertices is being lit, the lighting can be calculated at just those 4 vertices, and the rest of the surface can interpolate the gradient between them. This is a bit faster, although it also explains why having many objects in a scene, or complex objects with many vertices, can cause poor performance. If there were no lighting at all, I suspect it might even be faster NOT to use this rasterization technique for scenes of complex objects (scenes with more than 2 million triangles, which is more triangles than a 1080p screen has pixels).
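The vertex-lighting idea can be sketched as a tiny software rasterizer in Python. This is a toy, not how a GPU is actually programmed: it assumes the triangle has already been projected to screen coordinates and that lighting has already been computed at each of its three vertices (a square like the one described above would be split into two triangles). Each pixel center is tested against the triangle’s edges, and covered pixels blend the three vertex values with barycentric weights, a technique commonly called Gouraud shading. The names `edge` and `rasterize_triangle` are hypothetical.

```python
def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p): tells which side of
    the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize_triangle(v0, v1, v2, light0, light1, light2, width, height):
    """Fill the pixels covered by one screen-space triangle, blending the
    lighting values computed only at its three vertices."""
    image = [[None] * width for _ in range(height)]
    area = edge(v0, v1, v2)
    if area == 0:
        return image  # degenerate triangle covers no pixels
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            # Barycentric weights: how close p is to each vertex (they sum to 1)
            w0 = edge(v1, v2, p) / area
            w1 = edge(v2, v0, p) / area
            w2 = edge(v0, v1, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:  # inside the triangle
                image[y][x] = w0 * light0 + w1 * light1 + w2 * light2
    return image

img = rasterize_triangle((0, 0), (12, 0), (0, 8), 1.0, 0.2, 0.6, 12, 8)
for row in img:
    print("".join(" " if v is None else str(int(v * 9)) for v in row))
```

Only three lighting values are computed; every other pixel is a cheap weighted blend, which is why this approach is fast but also why sharp lighting details between vertices get lost.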

Rasterization has limitations on realism for shadows and, more importantly, indirect light and reflections, since no ray bounces around to see what objects are nearby. A variety of techniques exist to estimate such details, but none come close to true “ray tracing.”

That is why Nvidia is hyping its new RTX graphics cards: it claims they are capable of live, real-time ray tracing, which mostly just means more realistic reflections, indirect light and shadows. Some of the demos are pretty impressive, indistinguishable from a live-action image. But we’ve seen some pretty realistic-looking games as early as the days of the PS3 and Xbox 360… I don’t know that most games will really need ray tracing to achieve such quality… IF developers know what they are doing. Still, if ray tracing can be applied automatically, without careful pre-calculation or light maps baked into textures, it would be easy for even indie developers and hobbyists to make games that look as good as a Hollywood film.

While Nvidia claims ray tracing is now possible in real time, some early reports suggest it comes with a significant performance hit in large games with even minor uses of reflections (though the fact that it can do any ray tracing at all in less than a second is still impressive). I don’t know exactly how the graphics card works underneath… The company says the cards include new AI-based cores (which Nvidia calls Tensor Cores) to help improve performance, something I suspect has an even greater effect than the dedicated RT cores on board thanks to prediction and pre-processing, meaning performance might not be consistent for all users at all times. I suspect researchers will be happy to get access to all of the card’s components to use in custom tests and simulations. If nothing else, the cards should be able to run old-school rasterization lighting a bit faster than older cards, just barely giving us 4K 30fps gaming for most AAA experiences.

Some of the above information might be incorrect; this is just my understanding of the topic. But how does all of this relate to my work with “3D Cel Animation?”

An important part of my animation techniques involves using “Physics.Raycast” (Unity3D API) every frame to determine which 3D frame should be visible to portray a 2D character in 3D space. It requires physics colliders on every frame, which in itself is a computational mess. Ray casting is a building block of ray tracing, and Physics.Raycast (which doesn’t bounce recursively) is more about physics than visual rendering. But the two aren’t too different, are they? Could RTX bring significant computational improvements to Physics.Raycast? I don’t know how Physics.Raycast works under the hood: is it possible it works more like rasterization, checking ALL objects in a scene one by one to determine which object a single ray hit? Would it be much faster for me to use a more direct form of ray tracing from the character’s point of view to render different body parts? Maybe. This is my biggest concern: with some well-written GPU programming, I might be able to improve my animation techniques 100-fold (although GPU programming that saves references to objects, rather than simply rendering them, isn’t trivial). Comparing the noticeable slowdown I get with a few characters (let’s say 100 objects requiring raycasting per frame) to what a real-time ray tracing demo needs to do (2 million+ rays, plus recursive bounces) makes me feel a lot could be done to improve my performance, perhaps even more so on the new RTX cards.
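To make the “check ALL objects” question concrete, here is a brute-force raycast sketched in Python: every collider, simplified here to an axis-aligned box, is tested against the ray with the standard slab test, and the closest hit wins. This illustrates the brute-force idea only and is not how Unity’s Physics.Raycast is implemented; physics engines and ray tracing hardware generally avoid testing everything by organizing objects into spatial acceleration structures such as bounding volume hierarchies. The function names and the `(name, box_min, box_max)` collider format are hypothetical.

```python
import math

def ray_aabb(origin, direction, box_min, box_max):
    """Slab test: distance at which the ray enters the box, or None."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0:
            if o < lo or o > hi:
                return None  # parallel to this pair of faces and outside them
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return None  # the slabs don't overlap: the ray misses
    return t_near

def raycast_all(origin, direction, colliders):
    """Brute force: test every collider and keep the closest hit.
    The cost grows linearly with the number of colliders."""
    closest, closest_t = None, math.inf
    for name, box_min, box_max in colliders:
        t = ray_aabb(origin, direction, box_min, box_max)
        if t is not None and t < closest_t:
            closest, closest_t = name, t
    return closest, closest_t

colliders = [
    ("front_frame", (-1, -1, -3), (1, 1, -2)),
    ("back_frame", (-1, -1, -6), (1, 1, -5)),
    ("other_character", (5, 5, -3), (6, 6, -2)),
]
print(raycast_all((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), colliders))
```

With brute force, 100 colliders means 100 box tests per ray, and the cost keeps growing with the scene; a hierarchy lets most of those tests be skipped, which is the kind of work Nvidia says the RT cores accelerate (BVH traversal and ray/triangle intersection).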

But without extra programming, even if Unity3D did update to use RTX for raycasting… 100 vs. 2 million makes it seem like the difference wouldn’t amount to much. Like most programming problems, optimizing your code properly makes all the difference. And it isn’t easy to make games and optimize toolsets at the same time while working a separate full-time job. Finish the next game first, then look at optimizations using the GPU and an improved user workflow, then the next game, then more development improvements, then repeat…