This blog is about my journey as an indie game developer. But I do other things too: last year I finished my Master’s degree with a thesis on artificial intelligence. In my defense I talked about a new algorithm concept of my own design, and how it improved over existing implementations. I also secretly intended the design of this algorithm to be usable in my next game project, which I am currently working on.

So with this justification and with my last paper on the subject published, I will briefly talk about my Master’s research in the article, and why I think I may never take a job involving “academia research” again.

Firstly, my work requires some knowledge of “Evolutionary Algorithms.” These are algorithms that represented simplified evolution, typically by having things that represented solutions or subsets of solutions and evolving them.

Take for example, a trainer’s team of Pokémon (topical, eh?). A trainer may start with a random set of Pokémon they use to battle other trainers. Among other things, the trainer’s success in battle depends on the types of Pokémon (some elements are stronger against certain types), the ratio of attack/defense/health points, and so on. A trainer will probably start with a random set of Pokémon, but after collecting others will be able to customize their team better. Suppose the trainer had a handful of Pokémon teams and kept track of which teams performed the best. To build even better teams, the trainer can make new teams that are combinations of the best performing teams from the previous set.

This is what most Evolutionary Algorithms do. The algorithm will have a set of solutions randomly generated at the start. After seeing how successful each solution is, the algorithm combines the best-performing solutions and includes them in a new generation of solutions, along with additional randomized solutions. Even by just combining the best solutions and no other interference, the algorithm will show improvement with each successive generation. Ultimately, the programmer decides when to stop the algorithm and take the best solution so far.

This is a fascinating strategy, to use such simplified logic and rely on random combinations to achieve better results. For some complicated problems where a better strategy is difficult to come up with, these algorithms are a great alternative. Thousands of researchers have implemented thousands of variations on these for decades. There is even research in Evolutionary Programming to have software write itself, independent of the programmer.

(It is important to note that these algorithms are typically not used to simulate real evolution for biology or historical research – while it could and should, almost all existing research in Evolutionary Algorithms has been on optimization and problem-solving.)

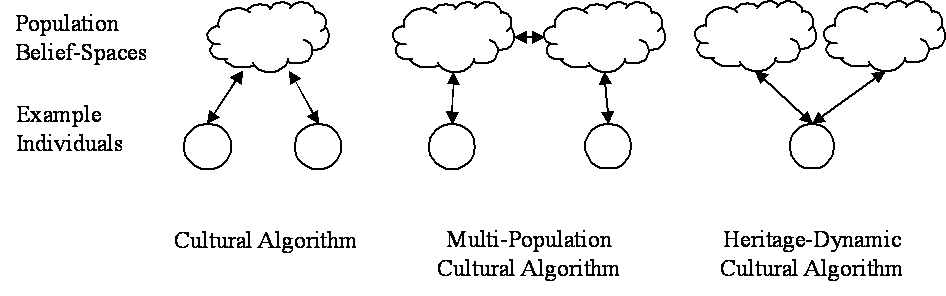

The “Genetic Algorithm” is such a Evolutionary Algorithm that portrays this simple methodology. But a Pokémon trainer knows certain things about their Pokémon – for example, that lightning is strong and water Pokémon, and that a team entirely made of rock Pokémon would not have a strong defense against all other teams. To use knowledge like this, you might use a “Cultural Algorithm,” which uses Culture (aka Knowledge) to help drive how better-performing solutions are combined and mutated. This uses a cloud-like belief-space that controls the evolution, for example limiting how many Pokémon of the same type can be in a team, or making sure at least one Pokémon type is included for the team to combat any possible type. Any why stop there? With “Multi-Population Cultural Algorithms,” you can have multiple trainers experiment with teams, using and updating different knowledge parameters in their belief-space and sharing their results.

Multi-Population Cultural Algorithms are still fairly new in computational sciences, only about 15 years old and still not standardized in any form for implementation purposes. If you wanted to program one, there are many factors in how the separate populations of solutions communicate and cooperate with each other, in addition to limitless knowledge-representations carried over from Cultural Algorithms. The result is dozens of different published implementations with different names and problem domains. Even then, there are limits to what Multi-Population Cultural Algorithms can implement.

One such limitation was that a solution could only belong to and affect one population belief space at a time. They could “migrate,” but no implementation had a solution evolving from multiple population belief-spaces at once, such that the population combinations themselves could represent a meta-solution at a macro level from what individual solutions did. From a intuitive context, my inspiration was something like: what if a Pokémon trainer came from generations of Pokémon trainers from different towns – despite living in Pallet Town, would having a mother from Cerulean City make the trainer more partial to water-type Pokémon? This did not necessarily mean better performance, but this made assumptions in Multi-Population Cultural Algorithms to make them easier to explain and implement, and raised new problem domain opportunities.

I called this “Heritage,” and suggested an implementation in a “Heritage-Dynamic Cultural Algorithm,” or HDCA (High-Definition… I’m happy to have coined the acronym).

My secret intention for game development was to use this “Heritage” paradigm to save traits and cultures passed down to generations of NPCs in a multi-generational game world. The Sims incorporated virtual characters having children, but I am pretty sure there are no saved details regarding culture, religion or society along with the typical personality-defining trait system. With my system, a more subtle but clear connection to descendants from their ancestors can be viewed. And should the belief-space of that population change in the modern day, such as it going to war or requiring support, an appropriate reaction could be seen in decedents that no longer live among that population. What I have heard from professional game developers is that this type of extreme detail only complicates the game’s logic, resource usage and ultimately would not be noticed by the average gamer or contribute to the sense of immersion or fun. But award-winning games like Shadow of Mordor and No Man’s Sky have shown persistent relationships with characters and creatures can be a compelling concept of much interest to the average gamer. Simply calling realistic animation transitions your complete game AI should not be acceptable anymore, and I’m excited to see the immersive worlds we build within the next decade.

It is difficult to prove that Heritage can make a game fun, and such was the difficulty of my research topic. I was able to prove that HDCA was more optimal than Multi-Population Cultural Algorithms for simple problems, but not more so than Cultural Algorithms. Interestingly, I was able to prove that Cultural Algorithms were only better in dynamic (changing) search environments, and that simple optimization functions were more appropriate for Genetic Algorithms to use, a subject that no publications have bothered to mention – the common belief and hope among researchers was that Cultural Algorithms would be better, and that Multi-Population Cultural Algorithms would be better still. The flaw in my experiments is that Cultural Algorithms were specifically designed for dynamic environments, and Multi-Population Cultural Algorithms are typically tested against complicated multi-objective problems, and therefore are difficult to compare. I have not suggested or tested an explicit problem domain for HDCA, and so it was difficult to have my research accepted by peers, and likely will have no impact on the world of Computer Science.

This is my problem with research in general, a opinion I’ve had for several years and a point of disrespect I have for the field. The research environment is selfish, and better accommodates work that adds a grain of sand to existing work rather than new ideas that build blocks for new structures. To the conferences I’ve been to, academics are eager to present their own work and to mention their papers during discussions for another’s presentation, and I am certain that none walk away with the intention to drop their own work to collaborate with others. Conferences are expensive to attend and self-indulgent from the academic societies and publishers that hold exclusive rights to the articles and data, often hidden behind paywalls that only institutions bother to subscribe to.

Despite being consistently labelled as separate from industry and freeing for the researcher, work simply is not accepted if a practical domain cannot be proven with it. I have yet to see any research that can be called “true” artificial intelligence, only dead zombies of code that obey their masters to improve speed of human tasks. If I were to build a perfect AI that was indistinguishable to a human being, walking and talking and thinking like one simply because I could make it, no existing reputable group would publish the work behind it for researchers to read and discuss. Many researchers are aware of these problems, and I am convinced this field of academia moves far too slow and relies too much on acceptance to be worth exploring, even if this structure was well-meaning at first.

Instead, I now research animation with game development. I consider myself a world-leading researcher simply because no one else has passed me in the niche field of pseudo-3D animation I work with. Again, no reputable group will accept such research – I cannot prove with graphs that 2D animation the way I use it is more optimal, or more realistic, or more pleasing to the viewer’s eye. But I love what I do and am able to show my results publically, advertising through commercial games the research to thousands of consumers and dozens of excited enthusiasts, instead of tens of bored PhD students and professors looking to make their own distinct mark on the world. For now, this is the right path of research for me, and if nothing else, I’m having fun and fulfilling myself in ways data can’t measure.

For others interested in reading further about HDCA, you can see my ugly source code on this Github repository.